“With artificial intelligence, we are summoning the demon”- Elon Musk

“The development of full artificial intelligence could spell the end of the human race”- Stephen Hawking

Type “ai powered” into Google and here’s what the search engine suggests:

Artificial intelligence has been a hot topic in technology for a while, and for good reason: it’s winning board games against grandmasters, curating what we shop for, watch and type, and even talking to us. Understandably, this has spurred fears (backed by some influential figures) that AI is growing too powerful and will take our jobs, or even cause our extinction.

Only one of those fears is likely to come true in our lifetimes, and it may well be for the better. Like all disruptive technology, AI has its advantages and disadvantages, but these haven’t been made clear to those of us who didn’t study data science or software engineering. The key to determining the true threat (and value) that AI presents lies in understanding the state of AI innovation today.

What is artificial intelligence?

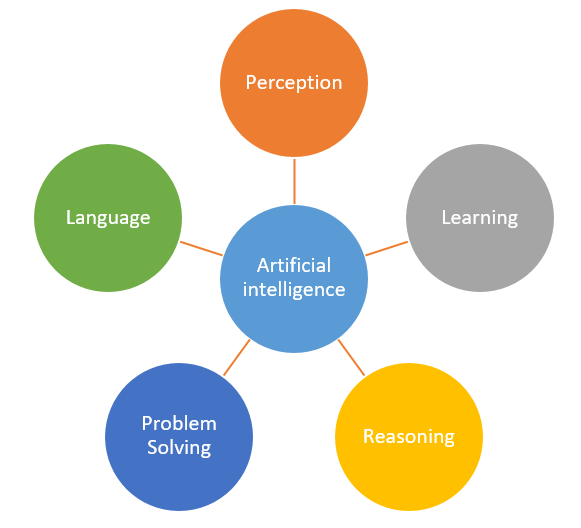

Artificial intelligence is the ability of a digital computer to perform tasks commonly associated with intelligent beings, most notably (but not limited to) learning, reasoning, problem solving, perception, and using language [1].

So, intelligence isn’t just being able to do one thing really well. It has many components, and being good at one particular task (like playing chess) doesn’t indicate intelligence overall. Rather, the aim of any simulated intelligence is to achieve its goals without human interference.

As human intelligence is multifaceted, true artificial intelligence that aims to replace it must be multifaceted as well. When AI is able to learn any intellectual task that a human being can do, it is referred to as artificial general intelligence (AGI). The endgame is known as superintelligence: the point at which AI surpasses humans in overall intelligence.

Learning

How does a machine learn? Of the various components of intelligence, learning from past experience stands out as the most important. A program that can continuously adjust its actions and improve over time is a pretty intelligent one. There are three main types of learning: supervised, reinforcement and unsupervised [2]. These can be combined, but for the purposes of this article we’ll stick to the three main ones.

Supervised learning involves being guided by a ‘teacher’ who provides sample inputs along with the desired correct outputs; the machine then fits a ‘model’ (an equation) that maps the inputs to the outputs.
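To make the ‘teacher’ idea concrete, here is a minimal sketch of supervised learning. The data, the straight-line model and the function names are all made up for illustration; real systems use far richer models, but the principle of fitting an equation to labelled examples is the same.

```python
# Labelled examples from the "teacher": each input is paired with its correct output.
# The numbers are invented for illustration and follow y = 2x + 1.
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [3.0, 5.0, 7.0, 9.0]

def fit_line(xs, ys):
    """Least-squares fit of the model y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(inputs, targets)

def predict(x):
    """Use the learned equation on an input the teacher never showed."""
    return slope * x + intercept

print(predict(10.0))  # close to 21, since the examples follow y = 2x + 1
```

The machine was never told the rule "double it and add one"; it recovered the equation from the examples alone.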

Reinforcement learning is similar to training an animal using a carrot and a stick. The AI’s behaviour is reinforced (‘rewarded’) when it successfully completes an objective and discouraged when it fails; it uses this feedback to train itself.
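The carrot-and-stick loop can be sketched in a few lines. This is a deliberately simplified illustration: the two actions, the reward values and the learning rate are hypothetical, and real reinforcement learning also needs exploration and a notion of state, which are left out here.

```python
# Hypothetical rewards: bumping into the wall is punished (the stick),
# turning away is rewarded (the carrot).
REWARDS = {"bump_wall": -1.0, "turn_away": 1.0}
ACTIONS = list(REWARDS)
LEARNING_RATE = 0.5

def train(trials):
    # The agent starts with no opinion about either action.
    values = {action: 0.0 for action in ACTIONS}
    history = []
    for _ in range(trials):
        # Pick the action currently believed to be best (ties go to the first one).
        action = max(ACTIONS, key=values.get)
        reward = REWARDS[action]
        # Nudge the estimate for that action towards the reward just received.
        values[action] += LEARNING_RATE * (reward - values[action])
        history.append(action)
    return values, history

values, history = train(10)
print(history)
print(values)
```

The agent bumps into the wall once, is punished, and from then on prefers turning away; nobody ever told it which action was correct.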

Unsupervised learning is the most difficult, and yet it is the goal of artificial general intelligence. There is no teacher or reward-based system here; the machine simply has to figure things out on its own, given some unlabelled, unclassified data. This is how babies learn early in life about abstract concepts like gravity (a ball will fall if it is not supported).
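As a small illustration of learning without labels, the sketch below groups unlabelled numbers into two clusters using a basic k-means loop. The data, the choice of two groups and the starting centres are assumptions made for the example; nothing here tells the program which point belongs where.

```python
def two_means(points, rounds=10):
    """Split 1-D points into two groups with a basic k-means loop."""
    # Assumption: start the two group centres at the smallest and largest value.
    centres = [min(points), max(points)]
    groups = [[], []]
    for _ in range(rounds):
        groups = [[], []]
        for p in points:
            # Assign each point to whichever centre is nearer.
            nearest = 0 if abs(p - centres[0]) <= abs(p - centres[1]) else 1
            groups[nearest].append(p)
        # Move each centre to the average of its group (keep it if the group is empty).
        centres = [sum(g) / len(g) if g else c for g, c in zip(groups, centres)]
    return centres, groups

measurements = [1.0, 1.2, 0.8, 9.0, 9.5, 8.5]  # made-up, unlabelled data
centres, groups = two_means(measurements)
print(groups)   # the small values end up together, and so do the large ones
print(centres)  # roughly 1 and 9
```

The program discovers that the data falls into a "small" group and a "large" group, but it has no idea what either group means; attaching meaning is the hard part.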

Where we’re at

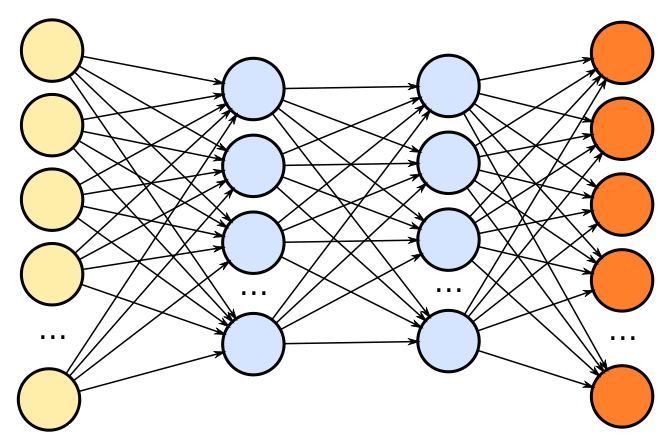

We’ve done pretty well here. This field is where the majority of AI breakthroughs have taken place and where most AI buzzwords like “machine learning” and “neural networks” originate. Machine learning allows programs to learn from examples or feedback (whether through supervised, unsupervised or reinforcement learning) instead of being explicitly programmed with a rule for every situation.

Deep neural networks are loosely modelled on the ‘neurons’ in our brains: each artificial neuron can represent a particular activity (or computation). Pathways that lead to desirable results are strengthened while those that don’t are weakened [3]. In this limited sense machines are gradually becoming more like our brains; who knows, they might even produce irrational results, as our brains sometimes do.
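Strengthening and weakening pathways can be shown with a single artificial neuron. The sketch below uses the classic perceptron learning rule to teach one neuron the logical OR of two inputs; the task, the starting weights and the number of passes are chosen purely for illustration, and a deep network stacks many such units and trains them with more sophisticated methods.

```python
# Truth table for logical OR: (input pair, desired output).
EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def fire(weights, bias, inputs):
    """The neuron outputs 1 when its weighted input sum is positive."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(epochs=10):
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in EXAMPLES:
            error = target - fire(weights, bias, inputs)
            # error = +1 strengthens the active connections, -1 weakens them,
            # and 0 (a correct answer) leaves them untouched.
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias

weights, bias = train()
predictions = [fire(weights, bias, inputs) for inputs, _ in EXAMPLES]
print(weights, bias)
print(predictions)  # [0, 1, 1, 1], matching the OR truth table
```

Each wrong answer shifts the connection strengths slightly, and after a few passes over the examples the neuron answers every case correctly.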

Reasoning

Reasoning is the ‘common sense’ of a machine. It’s the ability to draw conclusions from data, through the processes of induction or deduction [4]. There’s so much data out in the world, but without the ability to draw inferences from it, that data is useless for answering new questions.

Where we’re at

Machine learning is great for modeling relationships where the inputs and outputs are clearly defined. The thing it’s not good at is finding new relationships between variables that haven’t already been specified. If a machine knows that an object it sees is an apple, it must specifically be told that it is also a fruit, in every instance where it sees an apple. The machine can’t arrive at these conclusions itself.

This is where Deep Reasoning comes in, using relational networks to determine relationships between data points [5]. This is still in its infancy, however. Until we innovate in this space, simply having more data will not make AI more effective than it already is. We would need data on absolutely every relationship in the world, which is unthinkable; instead, we need to learn how to do more with the data we already have. Machines severely lack common sense today, but I don’t think you needed this article to know that.

Problem solving

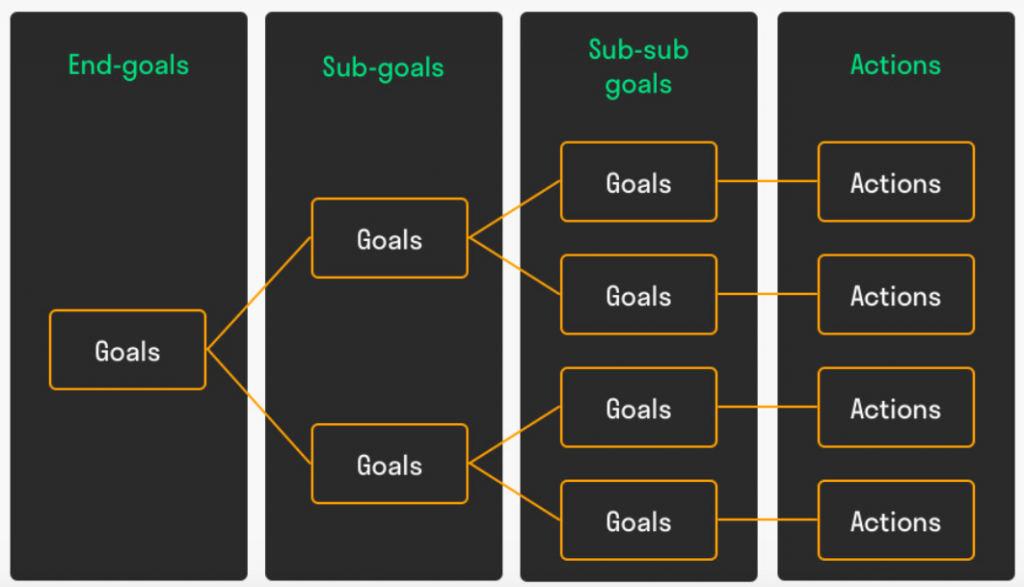

The whole point of employing AI is to solve a problem in order to achieve a goal. That is, given some input, return an output helpful to the task at hand. Any old calculator can solve simple maths problems for us, but the key is to achieve general-purpose problem solving: a machine that can solve a wide range of different problems, instead of a select few.

Where we’re at

We don’t yet know how humans truly approach problem solving. In an effort to model something close, though, AI problem solving is largely captured by a series of nested if-else statements along with a ‘working memory’ of the current situation [6]. If a certain condition is met, do this; else, do that; then update the situation in working memory and move on to the next if-else statement until the problem is solved. As you can tell, you’d have to model every possible circumstance with an if-else statement, which makes general-purpose problem solving extremely difficult. Every problem has to be deterministic in nature. We are not close in this area.
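Here is a minimal sketch of that if-else-plus-working-memory loop, applied to a toy problem of getting through a locked door. The problem, the rules and the names are all hypothetical; the point is only to show the shape of the approach and why it is brittle.

```python
def solve(memory, max_steps=10):
    """Apply if-else rules until the goal (an open door) is reached."""
    steps = []
    for _ in range(max_steps):
        if memory["door_open"]:
            break  # goal reached, nothing left to do
        elif not memory["has_key"]:
            steps.append("pick up key")
            memory["has_key"] = True       # update the working memory
        elif memory["door_locked"]:
            steps.append("unlock door")
            memory["door_locked"] = False
        else:
            steps.append("open door")
            memory["door_open"] = True
    return steps

working_memory = {"has_key": False, "door_locked": True, "door_open": False}
plan = solve(working_memory)
print(plan)  # ['pick up key', 'unlock door', 'open door']
```

The program solves this one problem, but only because every circumstance was written down in advance. Give it a door with a keypad, or a window, and it has no rule to fall back on.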

Perception

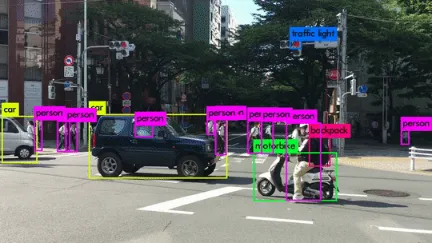

Perception is all about understanding the environment by categorising sensory data (as inputs) into judgements. It works like the ‘senses’ of a machine, providing context for the machine’s behaviour. If a robot vacuum cleaner detects a wall, it will stop just before it reaches the wall and turn in a different direction.

Where we’re at

From robot vacuum cleaners to self-driving cars and even smart security systems, algorithms are increasingly able to utilise sensor data in a meaningful way. However, the bottleneck lies once again in interpreting and judging the sensory data. While neural networks make it possible to learn and categorise different sights (image recognition) and sounds (speech recognition), the key to higher-level perception lies in contextual awareness: a given set of input data can be perceived in several different ways depending on the context and state of the perceiver [7]. A person walking in front of your car might be an emergency or perfectly fine, depending on the colour of the traffic lights and whether your car is moving. In some ways, perception is the real ‘human’ bottleneck compared to the other components.

Language

Machines need to be able to understand humans and communicate with them. Many programs can spit out English at us (like the Magic 8 Ball), but the hallmark of true intelligence is being able to formulate an unlimited number of different sentences in a limitless set of contexts, rather than a specific few sentences in a finite set of contexts. An AI needs to be able to genuinely understand the rules of linguistic behaviour.

Where we’re at

This is where Natural Language Processing (NLP) algorithms come in. Machines use syntactic analysis (understanding how words are arranged in a sentence) and semantic analysis (understanding the meaning behind a sentence) to understand humans [8]. Machines do reasonably well at the former, but understanding meaning is still very much in its infancy. If I said “That was great”, am I being genuine, sarcastic, or perhaps ignoring you with filler conversation? Notice how something like sarcasm (with underlying meaning) is extremely difficult for a chatbot or a Google Home to understand.
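To see why sarcasm is so hard, consider a deliberately naive approach to meaning: counting positive and negative keywords. The word lists and the function below are hypothetical and far cruder than what real NLP systems do, but they show how a program that only looks at the words themselves cannot tell a sincere remark from a sarcastic one.

```python
import re

# Hypothetical, deliberately tiny word lists.
POSITIVE = {"great", "good", "love"}
NEGATIVE = {"bad", "awful", "hate"}

def keyword_sentiment(sentence):
    """Label a sentence by counting positive and negative keywords."""
    words = re.findall(r"[a-z']+", sentence.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

sincere = keyword_sentiment("That was great")
sarcastic = keyword_sentiment("Oh sure, three hours in traffic. That was great")
print(sincere, sarcastic)  # both come out as "positive"
```

Both sentences get the same label, because the cue that flips the meaning (three hours in traffic is not great) lives in world knowledge rather than in the words.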

Overblown fears

“By far the greatest danger of artificial intelligence is that people conclude too early that they understand it”- Eliezer Yudkowsky

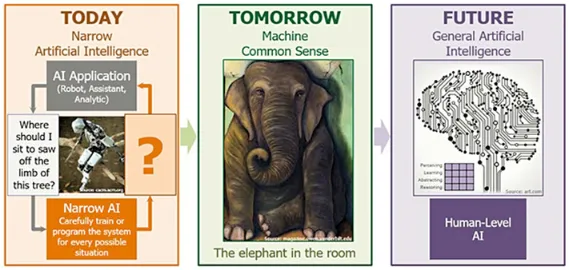

It’s evident that to model artificial general intelligence, we need to integrate all of the aforementioned components into some semblance of a program. That is, this program needs to be able to learn, reason, solve general problems, perceive (and operate in) its environment, and communicate.

So, all the breakthroughs that we’re seeing in the news are for specific use cases (narrow AI); none of them integrates the kinds of intelligence we already have so that they can be applied flexibly to all use cases, as humans can. These intelligent programs don’t yet know how to talk to each other, and we have made virtually no progress down that crucial path. The only evidence we have of a structure exhibiting this kind of intelligence is the brain. The trouble is, we don’t even know how to model a basic cell, or even a worm (with about 300 neurons; see OpenWorm [9]), let alone anything close to the brain’s power. And if there is a structure outside of the brain that can exhibit general intelligence, then it is as alien to us as, well… aliens.

The current state of AI, then, is like a farm with some great tools that still need a farmer. We’re nowhere near the point where the tools can run everything independently, or where only one tool is necessary.

Mass extinction- when and how?

“Worrying about AI evil superintelligence today is like worrying about overpopulation on the planet Mars. We haven’t even landed on the planet yet!”- Andrew Ng

Everyone’s so fixated on the day we achieve artificial general intelligence that they’re not concentrating on when or how we’ll even get there. That day is at the very least decades away. It’s as if we’re only just discovering some of the gears and components; a full-fledged engine, or even a prototype car, is a long way off and most likely won’t arrive in our lifetimes.

So, relax. There isn’t some mad scientist/engineer that can accidentally create a monster, killer AI that will end us all.

Mass unemployment or higher quality work?

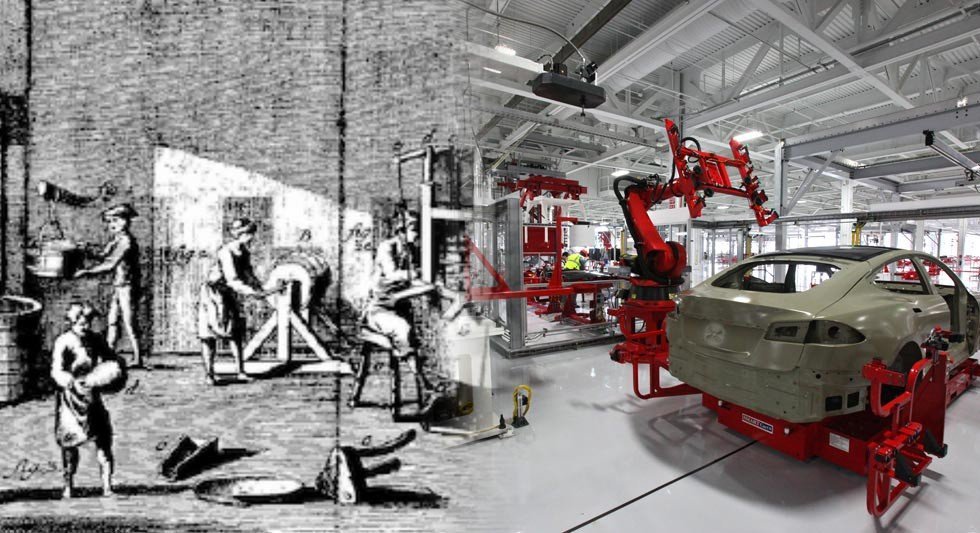

“The question is not whether automation is going to eliminate jobs. There is no finite number of jobs that we’ve been sitting around dividing up since the Stone Age. New jobs are being created and they’re usually better and more creative jobs.”- Naval Ravikant

Human work has been replaced by machines since the Agricultural Revolution (or arguably even before that). Interestingly enough, delegating human labour to machines has resulted not in fewer jobs, but in more. Productivity increases allow for growth, which allows for the creation of better quality jobs [10]. We’ve got all of these highly refined machines and algorithms doing specific tasks for us extremely well. Humans need to do less and less menial work, which opens up significant opportunities to do what AI won’t be able to do for a long time: creative, flexible work. Every human is creative and multifaceted, but we can’t use that potential effectively if we’re forced to clean floors or work the cash registers for the majority of our lives. Every AI breakthrough enables us to move up in the world.

The same thing happened when the sewing machine was invented: people in the textile industry may have lost their jobs hand-sewing clothing, but those same people could be reassigned to other high-growth areas of the industry, increasing productivity. The same goes for the Internet: we buy fewer newspapers and mail fewer letters, but thousands of new careers have emerged, such as web designers, data scientists and even bloggers [10].

Perhaps the biggest shame is that some skills will inevitably become unnecessary in the future. However, the solution shouldn’t be to hamper technological progress and productivity in order to keep your job; it should be to keep learning and adapting to the new world.

So don’t worry about extinction or unemployment. It’s either a long way away or not as bad as you think. At the moment, AI is great in a select few areas (like pattern recognition and learning) but not so great at all the other components of intelligence. If there’s one thing we know, it’s that we need to learn far more about intelligence in its human form, through fields like psychology and neuroscience, before we can think about successfully replicating it in digital form. At the moment, AI isn’t necessarily in its infancy, but perhaps it’s just on its way to hitting puberty.

Sources

1. https://www.britannica.com/technology/artificial-intelligence

2. http://www.allthingsinteractive.com/new-blog/2016/12/3/three-types-of-learning-that-artificial-intelligence-ai-does

3. https://pathmind.com/wiki/neural-network

4. https://towardsdatascience.com/whats-next-for-ai-enter-deep-reasoning-fae8b131962a

5. https://towardsdatascience.com/deep-learning-approaches-to-understand-human-reasoning-46f1805d454d

6. https://www.ritsumeihuman.com/en/essay/the-problem-solving-ability-of-human-and-artificial-intelligence/

7. https://cis.temple.edu/~wangp/3203-AI/Lecture/IO-2.htm

8. https://becominghuman.ai/a-simple-introduction-to-natural-language-processing-ea66a1747b32

9. http://openworm.org/

10. https://singularityhub.com/2019/01/01/ai-will-create-millions-more-jobs-than-it-will-destroy-heres-how/

Cover photo by Andy Kelly on Unsplash